Testing an Apple TV GUI with GPT-3 and Stb-tester

By David Röthlisberger. Tweet your comments @drothlis.

Published 13 Oct 2022.

I used Stb-tester to generate a textual representation of the Apple TV’s screen, fed that to GPT-3, and fed GPT-3’s response back to Stb-tester. It’s super flaky —I don’t need to worry about my job security just yet— but it’s pretty neat to watch when it does work. Here’s a quick demo:

Quick background about Stb-tester

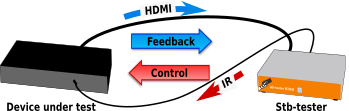

Stb-tester is a tool for testing set-top-boxes such as the Apple TV. It sends remote-control signals to the device-under-test, and captures its video output using an HDMI capture card. Stb-tester provides a Python API so that you can write test scripts that send keypresses, look for particular images on the screen, read the text on the screen using OCR, etc.

On top of these basic APIs, users are expected to define Python classes called “Page Objects” to provide a higher-level API about a particular page. For example, I already had some Page Objects for testing the “BT Sport” app: the Page Object for the “Menu” page provides a property called “selection” (which returns the text of the currently-selected menu entry) and a method called “select” (which navigates to the specified target and presses OK).

GPT-3

To hook this up to GPT-3 I wrote a simple loop that asks Stb-tester to detect the current page (it tries all the Page Objects in your git repo until one returns True for “is_visible”), and then it feeds the following information to GPT-3:

The Python repr of the current Page Object instance. This includes a list of its properties and their values. For example, this Page Object has one property:

<btsport.NavMenu(selection='Football')>A list of that page’s methods, i.e. the actions that you can do from that page.

I also tell GPT-3 about some actions that are valid from any page, such as

press(key_name)andlaunch_app(app_name).

Note that the underlying Page Object code is all written manually by a human. Here, GPT-3 is basically acting as a glorified GitHub Copilot, taking an English-language objective and deciding which Python function to call. The main difference from Copilot is that we’re feeding it live data (dynamic introspection of actual Python objects representing a live application-under-test), whereas Copilot works on static analysis without running any of your code.

My source code is here. This was heavily inspired by (copied from) Nat Friedman’s natbot, which uses Playwright to get HTML from the browser, then feeds a simplified version of the HTML to GPT-3. I think natbot has more potential because GPT-3 understands the HTML directly, whereas I’m still writing Python code manually to convert the screen’s pixels to a text representation that GPT-3 can understand.

Magic

The first few minutes felt like magic — how can it possibly know that?

From this input (plus my introductory instructions) it figured out that my navigate_to method takes a property name:

OBJECTIVE: Navigate to the Settings menu

CURRENT PAGE: <NavMenu(selection='Football')>

COMMANDS:

page.iterate_menu(property_name)

page.move_one(key)

page.navigate_to(**kwargs)

page.refresh(frame=None, **kwargs)

YOUR COMMAND:

page.navigate_to(selection='Settings')

(Later experiments suggest that “guessed” would be more accurate than “figured out”. Still, it guessed correctly.)

Even though this is the general-purpose “Davinci” model, not the “Codex” model used by GitHub Copilot, it still knows a crazy amount of Python:

OBJECTIVE: Print the title of the movie to the right of "Godzilla vs. Kong"

CURRENT PAGE: <appletv.Carousel(name='Top Movies', title='Godzilla vs. Kong', index=2)>

COMMANDS:

page.get_selected_thumbnail()

page.get_screenshot()

page.iterate_tiles()

page.open_details_page()

press(key_name)

HISTORY:

<appletv.Carousel(name='Top Movies', title='Superman 3', index=1)> : press("KEY_RIGHT")

YOUR COMMAND:

print(page.iterate_tiles().next().title)

Note that the information above (plus the introductory instructions in my prompt) is all the model is given — it hasn’t seen the actual source code of iterate_tiles, it’s just guessing how to use it from the name.

I didn’t need to provide any examples in my prompt, presumably because it already knows a lot about Python. (I tried, and the examples didn’t make any difference. Or rather I started with some examples in my prompt, and when I removed them it continued working just as well.)

It can issue commands within a longer sequence of actions, as you saw in the demo video above. You need to call it multiple times, and tell it about the previous steps that it already did (more on this in “State”, below).

Frustration

All of the results I’ve mentioned so far, including what you saw in the video, took literally one morning to put together. All of the examples in this article are real responses (albeit cherry-picked — not all attempts were equally successful).

Then I spent the next 2 days trying all sorts of prompts to get it to do more complicated things, and I failed miserably. My initial excitement wore off pretty quickly. You spend hours trying to get it to do some particular task, and your changes cause regressions in tasks it used to be able to do. It’s very frustrating and unfulfilling because the time you spend doesn’t necessarily translate into a deeper understanding of the model.

As I mentioned earlier, the model knows a lot about Python. I spent hours trying to get it to issue press(key_name) commands, until I realised my prompt said “You are given […] The valid commands that you can issue from the current page, as Python method signatures”. I changed “method” to “function” and voilà — it started issuing press commands occasionally. Or did it? Did I just get lucky? Was it some other, minor change to the prompt, or the randomness in the model? Even when I turned the “temperature” (randomness) all the way down to 0 I’d still get different responses for the same input, so it’s difficult to do controlled experiments.

State

Each time you call OpenAI’s GPT-3 API you feed it an input “prompt” and you get a response back (in our case, a snippet of Python code because that’s what our prompt asked for). That’s it. There’s no “session”, no state preserved between API requests. But we can preserve some state by putting it into the prompt!

I added this sentence to my prompt:

You are given:

[...]

4. The previous pages you saw and the commands you issued to get to

this page (in the order seen/issued, i.e. most recent last).And then I list the history, like this example taken from the 6th iteration of a real run, after it had already issued 5 commands:

OBJECTIVE: Open the BT Sport app, go to the Settings menu and check whether I'm logged in

CURRENT PAGE: <btsport.Settings(entries=['Legal Information', 'Log in'])>

COMMANDS:

page.refresh(frame=None, **kwargs)

HISTORY:

<appletv.Home(selection=Selection(text='Photos'))> : launch_app("BT Sport")

<btsport.NavMenu(selection='BT SPORT')> : page.select("Settings")

<btsport.Settings(entries=['Legal Information', 'Log in'])> : launch_app("BT Sport")

<btsport.Settings(entries=['Legal Information', 'Log in'])> : print(page.entries)

<btsport.Settings(entries=['Legal Information', 'Log in'])> : print(page.entries)

YOUR COMMAND:

assert "Log in" in page.entries

In each iteration through the main loop, my script appends the previous page & command to the HISTORY list, and calls GPT-3 again with the original objective, the new page and its methods, and the history.

Did this help? Definitely. It was unable to complete the multi-step task without it. But now I can’t be 100% sure that it isn’t confusing “HISTORY” for “COMMANDS”, at least some of the time — it can get stuck repeating the same command over and over, perhaps because it’s now appearing several times in the prompt. I tried cranking the “frequency penalty” up high but it didn’t help.

No “mental model” of the application under test

I tried to get it to navigate semi-randomly by pressing up/down/left/right/ok, and learn about the relationship between the different pages it landed on. It didn’t work at all. Then I simplified the task to learning the layout of an on-screen keyboard, but I couldn’t get that to work either.

Then I simplified the task even further to see if it can figure out a simple grid like this:

a b c

d e fThis is my input, and the model’s response:

You are an agent controlling the on-screen keyboard of

a GUI application using a remote control. The keys are

laid out in a regular, rectangular grid.

To navigate from "a" to "b", press "right".

To navigate from "b" to "c", press "right".

To navigate from "a" to "d", press "down".

To navigate from "b" to "e", press "down".

To navigate from "c" to "f", press "down".

What do you need to press to navigate from "b" to "f"?

List each step of the navigation, showing the starting

key, what you pressed, and the key you landed on.

From "b", press "right" to land on "c".

From "c", press "right" to land on "d".

From "d", press "down" to land on "e".

From "e", press "down" to land on "f".

As you can see I tried every trick I’d read about on twitter: Particularly telling it to “show its work” and break down the answer into separate steps. Without that, the answers are always wrong. With it, they’re only sometimes right.

So it seems GPT-3 can’t reason about spatial relationships or directed graphs? Sometimes you think it’s working but you try a slightly different question (“b to f” instead of “a to f”) and it all comes crumbling down.

Other times it surprises you — here it inferred transitions that I didn’t explicitly tell it about:

From "f" to "a":

Press "left" to go from "f" to "e".

Press "left" to go from "e" to "d".

Press "up" to go from "d" to "a".

Cost

OpenAI charges USD$0.02 per 1,000 tokens. Each of my requests used about that, 1,000 tokens (here’s my full prompt again). If you give my script free rein to run without human interaction, in an effort to get GPT-3 to explore and learn some kind of model of the application under test… well, you can very quickly use up your $18 free trial credit.

Future work

This particular use of GPT-3 isn’t very promising — it’s too flaky, unpredictable, and not clever enough for anything but the most trivial tasks. Having said that, I wouldn’t mind trying the same experiment with the “Codex” model, which is specifically designed to output Python code. Codex is the model that Copilot uses, but it isn’t available via the API yet (there’s a closed beta with a waiting list).

Nevertheless, I’m bullish on Machine Learning for improving the state of the art in GUI testing. Particularly in 3 areas:

Describing the state of the application under test: Image classification and segmentation are mature applications of Machine Learning that are particularly relevant to Stb-tester, and we envision tools that make it easier to write new Page Objects, or do away with a lot of Page Object code altogether!

Automated test-case generation: In practice I think this would look like a search-based exploration, building up a model of the application under test, and then comparing it against an older model to detect changes in behaviour. This exploration could even leverage the Page Objects you’ve already written.

Oracles (i.e. how to tell if the behaviour is OK or a bug): My main idea is the model comparison I mentioned in the previous bullet point (i.e. characterisation testing). Maybe anomaly detection algorithms could be useful?

What’s most exciting is that I can see a clear path forward to apply Machine Learning incrementally in any of these areas to start delivering valuable tooling.